Every week, new AI tools and use cases hit the market. For branding and marketing teams, this can be an exciting prospect, as new ways to work and collaborate are discovered, leading to dramatic time and cost savings and turbocharged creative capacities.

At the same time, however, the rush to invest in AI solutions, or to adopt free online ones, can backfire if care isn’t taken, with potentially huge consequences for teams and their businesses.

Amongst the new AI tools on the market, Generative AI (GenAI) is particularly important for brand marketing. As with the popular ChatGPT and Midjourney tools, GenAI allows users to describe tasks and let powerful computers get on with generating outcomes.

These could be AI-generated images and brand assets, customer support messages, or new campaign ideas.

Forecasts suggest the GenAI market is set to boom over the next decade. For brand teams interested in crafting iconic and trusted brands in the 2020s and beyond, the time to get to grips with these technologies is now.

AI, brand reputation and trust

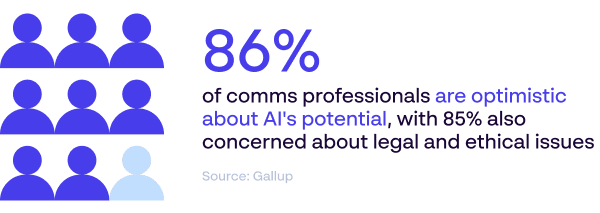

A survey of communications professionals found that, while almost 86% were optimistic about the potential of AI, 85% were also concerned about the legal and ethical issues.

AI adoption creates opportunities but also brings new challenges and risks. Customers are curious, but also anxious about what these new technologies will mean for their lives.

Over the coming years, how companies use their AI tools will have a direct impact on their reputation, how much customers trust them, and how markets treat them.

Modern brands should be aiming to use these new technologies to create real value for customers, businesses and society. It starts with knowledge, understanding, and careful planning.

Establishing trust in uncertain times

Customer trust has long been understood to be at the core of successful branding. As consumers, we simply like to spend our money with brands that we believe in. Research also shows that customers who trust a brand are three times as likely to forgive product or service mistakes.

When it comes to adopting AI tools, it’s therefore important to ask yourself the question – is our company using AI in a way that builds customer trust? Or could our choices be doing the opposite?

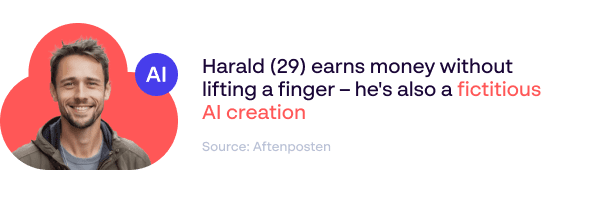

Sparebank found this out the hard way, when it came to light that the Norwegian bank had used an AI-generated image without labelling it as such.

This broke legislation on misleading marketing, which requires that subjects used in ads be real users of the product or service. It also potentially contravened Norwegian regulations on image manipulation, which require that images that have been airbrushed or edited are clearly marked in order to reduce pressure that could lead to shame or body dysmorphia.

The result was a media storm, in which Sparebank was forced to publicly admit its mistake and promise to take more responsibility in future.

The lesson? New capacities created by AI tools might seem great on paper, saving time and money and helping to bring new creative ideas to life. However, if they contravene legislation or prevailing social norms, the best intentions can quickly backfire.

Respecting privacy with AI technologies

How many people are currently using ChatGPT at work, unaware that information entered into its prompt box leaves the company’s control and, depending on the settings in use, may be retained by the provider and used to train future models?

With most companies building their AI tools on the back of third-party machine learning algorithms, complex issues are raised around data protection and privacy. Without proper assessment and training, well-meaning employees may end up breaching GDPR and other data-protection regulations without realising.

Until regulators and legislators catch up with AI technologies and provide clear and unambiguous guidelines, this is a potential minefield for brand reputation.

Companies need to take care not to intrude into their customers’ and employees’ private lives in ways that overstep reasonable boundaries.

Consider that, as tools get more powerful, brands will be able to advertise and persuade us with increasingly subtle and powerful strategies. Where is the line drawn between personalised, data-driven marketing and outright manipulation?

Or consider that there is at least one AI wellbeing tool in development that purports to allow companies to track productivity alongside employee wellbeing. All good – but what if the algorithm shows that employee productivity drops beyond a certain degree of wellbeing?

These might be speculations, but they could very soon become realities. As the famous theorist Paul Virilio once remarked, “the invention of the ship was also the invention of the shipwreck.”

Companies need to tread carefully to ensure that good intentions don’t accidentally lead to intrusive or manipulative practices, which, once publicly exposed, will meet with an understandable and expected backlash.

Implementing ethical AI solutions

With all this said, what can companies do to minimise the risk and maximise the value that AI can contribute to customers, employees, and society?

We can begin with a simple principle of humility. Despite our best attempts to guess, no-one knows for certain what the impact of AI will be. As we saw with Sparebank, what likely began as a reasonable business intention – “let’s use these new tools to save time and money” – quickly turned into a public scandal.

Sparebank quickly admitted it got it wrong, which may in the long run work in its favour. In times of uncertainty and change, transparency and honesty go a long way towards (re)building trust.

Brand teams should keep this in mind. Over the coming years, more companies are likely to have their reputations tested as they experiment with AI technologies. The most successful will find ways to innovate, while maintaining respect for their customers and sensitivity to when ethical lines are crossed.

Creating an ethical charter is one way that companies can ensure their intentions are aligned with positive societal outcomes. An ethical charter defines clear values for how AI should be used, providing a framework for decision making when boundaries get murky and regulations aren’t much use.

Papirfly’s ethical charter, for example, covers four major principles:

- Be a good corporate citizen when it comes to the rightful privacy of our users

- Ensure we act in an unbiased manner – always – as we’d expect to be treated too

- Build in the highest level of explainability possible, because output is important

- Overall, our task is simple – we must build technology that is designed to do good

Within each of these principles are further specific guidelines for how AI should be built and used within our business.

Naturally, ethical charters will vary from company to company to reflect their specific needs and markets. The aim should be to create a strong company culture, laying the foundations for ethical decision making and a reputation that customers can always trust.

Towards an AI-powered future

Artificial intelligence depends on responsible humans making clear decisions within strong ethical frameworks.

To learn how Papirfly is innovating ethically to meet the challenges of branding and AI, check out these links.

At Papirfly, we are committed to using AI to enhance every user’s experience, all while continuing to empower the world’s biggest brands with our all-in-one brand management platform.
